I see this claim appear in various forms: relational algebra is math, is based on math, comes from math, has strong mathematical foundations.

It's a statement that I struggle to assign any precise meaning to, but typically the intent seems to be something like:

- Relational algebra contains some sort of fundamental truth - discovered rather than invented.

- Relational algebra is powerful because of it's special math sauce.

- Relational databases are superior because they are 'based on math' and other database models are not.

I encourage mentally replacing the phrase 'based on math' with 'based on a true story'. This usually provides the correct intuition.

In mathematics, relations are sets of tuples of values. They are usually binary (only two columns) and often contain infinitely many tuples.

They're typically manipulated using set-builder notation, or treated as binary operators or functions. Some examples:

\[ <_{AC} \;= \{\;(a,c) \mid (a,b) \in \;<_{AB}, (b,c) \in \;<_{BC} \}\\ \;\\ a <_{AC} c \;\Leftrightarrow\; a <_{AB} b \;\land\; b <_{BC} c\\ \;\\ <_{AC} \;=\; <_{AB} \circ <_{BC} \]

There isn't much to be said about the properties of relations in general. They're mostly used as a building block for talking about equality, ordering, functions etc. The 'theory of relations' in mathematics exists to about the same extent that the 'theory of structs' exists in C - they're just a common way to structure data.

Relational algebras are a niche branch of formal logic, popularized by Tarski, that use an algebra over binary relations as a point-free alternative to (a subset of?) first-order logic. They aren't widely used. For example, I'd be surprised to see it mentioned in an undergrad-level course.

In databases, relational algebra is a somewhat fuzzy term.

In the relational algebra originally defined by Codd, relations are finite sets of records with named fields. Relations are manipulated using a small set of functions. Some of those functions (eg union, intersect, product) are based on similiar functions in set theory, with some tweaks to prevent duplicate field names and avoid nesting tuples. Others (eg rename, select) are unique to databases (at least in terms of notation and vocabulary - of course the operations can be defined mathematically).

\[ AC = \Pi_{a,c} ( \sigma_{b=b2} \; (AB \times_{} \rho_{b_2/b}(BC)))\\ \]

Codd's relational algebra bears some superficial resemblance to Tarski's relational algebras. Both are ways of expressing first-order logic using functions over sets. But the point of Tarksi's relational algebra is to avoid free boolean variables, whereas Codd's relational algebra uses arbitrary scalar formulae in the select function. And Codd's relational algebra is concerned with computability rather than axiomatization eg providing a difference function instead of a negation function to prevent the creation of infinite relations.

All of which is to say that Codd's relational algebra is not something that is actually used by mathematicians, nor does it benefit from any deep connection with existing bodies of mathematical theory. It's a novel set of operations created to satisfy the needs of 1970s database engineers.

It's main feature is that it's able to express a variety of queries while still being easy to implement efficiently. The relational calculus is often much nicer for the programmer, but it's not immediately obvious how to execute or transform it. The relational algebra, by contrast, has a fixed set of operations over large collections, which lends itself to rewrite-based optimization and to amortizing the overhead of an interpreter. Codd's theorem shows that we can compile the relational calculus to the relational algebra to get the best of both worlds.

So think of Codd's relational algebra not as some deep mathematical truth, but as a particularly nice compiler IR for queries over relations.

Anyway, Codd's relational algebra isn't what modern relational databases are actually based on. We have to add:

- Sorting and limits.

- Aggregation, grouping, windowing, partitioning.

- Multisets instead of sets (in part because aggregation over sets is awkward - you have to carefully preserve enough columns to prevent collapsing duplicate values).

- Nulls and three-valued logic (inconsistently).

- Relation variables (CTEs).

- Fixpoints/iteration (recursive CTEs).

- A scalar expression language with functions, collections and errors (

select 1/0).

Most databases also have:

- Some kind of

subqueryorapply-joinoperation to handle subqueries, lateral joins, IN/EXISTS, UNNEST etc (breaking the separation between relational and scalar expressions). - Functions which take accept relation and return relations.

- An embedded procedural language.

None of this could be expressed in the original relational algebra, and once you add it all it's not obvious we should even still be calling this an algebra, let alone granting it any mathematical mystique. I'd settle for calling it the 'sql calculus'. Or 'the algebra formerly known as relational'.

It is still a reasonably good compiler IR though, and that's still the most useful way of thinking about it.

Maybe we meant that the relational model is math, rather than the relational algebra?

But the relational model isn't particularly mathematical either. Multisets over records with named fields are not a common sight in math papers. Foreign keys are a compromise for compiler legibility - a mathematician would just let column types range over arbitrary sets instead. The various normal forms are useful design heuristics but they aren't very rigorously defined (eg is a string a composite value?).

As an aside: I often see claims that relational databases owe their performance to the power of the relational model and how easy it is to optimize. This is funny (in a traumatized kind of way) - writing a usable query optimizer is an immense amount of work. When the relational model was first proposed, the main argument against it was that it would never produce acceptable performance compared to eg hierarchical databases. It took at least a decade to prove that wrong, and even today engineers still resort to denormalization when struggling with performance. The relational model is very much succesful in spite of it's performance challenges.

So if we can't say that relational databases are 'based on maths', can we at least say that they have a mathematical description? Sure, but the trouble is that pretty much anything can be described in mathematical terms.

For example, there is a whole family of xml query algebras, many of which do a much cleaner job of traversing the scalar/collection divide than the hodgepodge of subqueries and UNNESTs used in relational databases today. So if relational databases are math then xml databases are even more math.

Unlike xml query algebras, the algebra-formerly-known-as-relational isn't defined anywhere at all. Every relational database uses it's own internal planning language with their own menagerie of operations and subtly different semantics. Since those planning languages are (by design) not user-facing they're also free to change from version to version.

Even the sql spec doesn't use relational algebra. Or any formal model at all. I'm hard pressed to even call it a spec - my copy of chapter 2 of the sql 2016 spec has 994 occurences of the phrase "implementation-defined" and 361 occurences of "implementation-dependent" and those are just the places where they noticed that the spec wasn't complete.

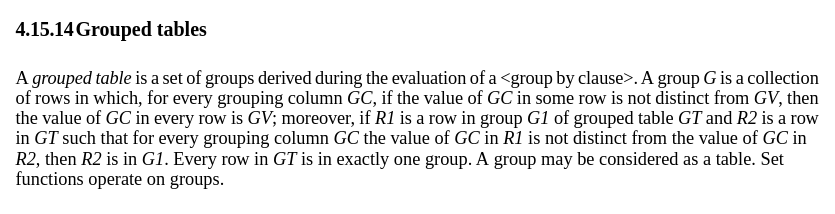

By way of example, here is the tail end of the spec for GROUP BY:

What exactly is a grouped table?

Not a particularly rigorous definition, but it's also demonstrating that to give a composable semantics to sql we actually end up needing data-structures other than first-order relations - relational algebra is not a good fit.

The closest I've seen to a mathematically rigorous model for sql is this 2017 paper which only covers a tiny subset of the language, excluding eg GROUP BY. (Incidentally, I'm 70% certain that they simply forgot to handle correlated variables in their proof of prop 2, making the scope of the paper even smaller).

To summarize:

- Codd's relational algebra:

- Was invented in the 70s for the needs of relational databases.

- Is not used by mathematicians.

- Differs in many important ways from the mathematicians notion of a relation.

- Doesn't come with any theorems which are useful for database engineers.

- Is not expressive enough for use in relational databases.

- The relational-ish sort-of-algebra used in query planners:

- Varies between databases, and between successive versions of the same database.

- Is significantly more expressive than Codd's relational algebra.

- Has no meaningful relation to first-order logic.

- Usually isn't an algebra - there is almost always an operator like

subqueryorapply-jointhat mixes the scalar and relational layers.

- SQL:

- Has no usable formal definition, let alone in terms of anything resembling Codd's relational algebra.

- Can't be reduced to Codd's relational algebra.

- Is difficult to even describe without using nested relations.

None of this is itself a criticism of relational databases! The relational model has many useful properties for engineers:

- Using ids instead of pointers or nested structures makes it substantially easier to provide efficient atomic updates and to write queries that follow access paths that weren't anticipated during schema design.

- Modelling constraints separately from data avoids the need to rewrite queries when constraints are added or removed. (Compare to a typical programming language, where changing something from a list to a hashmap requires rewriting most of the code that touches it.)

- Avoiding ordered collections by default makes it possible for query optimizers to dramatically reorder computation.

- The relational-ish sort-of-algebra used in query planners is well-suited to amortizing interpreter overhead, allowing simpler implementations.

There is no need to make vague appeals to a totally different field in order to justify liking it!

Find more unexplanations here.